Dataset: Simulated interactions between a customer and a shopkeeper in a camera shop scenario

We have presented a fully autonomous method that enables a simulated robot to learn behaviors from examples of simulated interactions between customers and a shopkeeper, without input from designers or manual annotation of the training data. Specifically, we focus on the robot's ability to generate actions that depend on memories of the customer's previous actions. For example, a shopkeeper robot should learn to make appropriate recommendations to a customer based on that customer's previously stated preferences.

Similar methods (cited below) have been demonstrated to work on data collected from live interactions between human participants playing the roles of customer and shopkeeper. However, in this work we sought to solve the problem of learning to generate memory-dependent actions, which were infrequent in the previously collected human-human dataset. Therefore, we simulated a dataset that contains many examples of such actions.

The purpose of this page is to share the dataset we have simulated.

Scenario

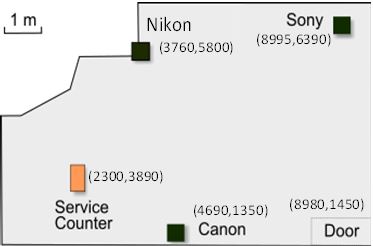

We chose a camera shop scenario, as in previous work. Our data-driven technique is designed to imitate the repeatable, formulaic behaviors found in many customer service domains, which makes the camera shop an ideal scenario for demonstrating it. The simulated camera shop contains three cameras (Fig. 1), one customer, and one shopkeeper.

Figure 1. Map of the simulated camera shop. The numbers in brackets indicate the positions of the cameras.

Each simulated interaction starts with the customer at the door and the shopkeeper at the service counter. The customer then enters and approaches one of the three cameras or the shopkeeper. The shopkeeper may approach a browsing customer to assist them by asking about their preferences, answering questions, or introducing the cameras and their features. In some cases the customer may state that they simply wish to browse; otherwise, the customer may ask about the cameras or state their preferences. The interaction proceeds until the customer decides to leave the store.

Details on the simulation technique can be found in the paper referenced below.

Dataset

The dataset contains 50,000 simulated interactions. The first 75% of the interactions (37,500) were used for training and the remaining 25% (12,500) were used for testing. In addition to the simulated data, we have included the action predictions of the proposed system and of the baseline system. Furthermore, the first 500 instances from the testing set were used in a human evaluation of the systems' output actions; these evaluations are also included.
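The split described above is by position, not random. The following is a minimal sketch of such an ordered 75/25 split, assuming the interactions are indexed 0 to N-1 in their original order; the integer IDs used here are hypothetical stand-ins rather than values read from the files.

```python
# Minimal sketch of an ordered (unshuffled) 75/25 train/test split.
# Assumption: interactions are indexed 0..N-1 in their original order;
# the IDs below are hypothetical stand-ins, not values from the dataset.

def split_in_order(items, train_fraction=0.75):
    """Split a sequence into (train, test) without shuffling."""
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]

interaction_ids = list(range(50_000))  # hypothetical interaction IDs
train_ids, test_ids = split_in_order(interaction_ids)

print(len(train_ids), len(test_ids))  # 37500 12500
print(test_ids[0])                    # 37500 (first test interaction)
```

Because the split depends only on order, the test interactions are simply the last 12,500; shuffling before splitting would produce a different partition from the one described here.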

The data are provided as CSV files. More details on the contents of each file can be found in 'README.txt'.
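A typical first step with per-row CSV data is to regroup the rows by interaction. The following is a minimal sketch using Python's standard `csv` module. The column names `interaction_id`, `actor` and `action`, as well as the sample rows, are hypothetical placeholders; the actual columns are documented in 'README.txt'.

```python
import csv
import io
from collections import defaultdict

# Minimal sketch: read a CSV file and group its rows by interaction.
# The column names "interaction_id", "actor" and "action" and the rows
# below are HYPOTHETICAL placeholders; see README.txt for the real ones.
SAMPLE_CSV = """interaction_id,actor,action
0,customer,example action A
0,shopkeeper,example action B
1,customer,example action C
"""

def group_by_interaction(csv_file, id_column="interaction_id"):
    """Return {interaction ID: [row dicts in file order]}."""
    interactions = defaultdict(list)
    for row in csv.DictReader(csv_file):
        interactions[int(row[id_column])].append(row)
    return dict(interactions)

interactions = group_by_interaction(io.StringIO(SAMPLE_CSV))
print(len(interactions))             # 2
print(len(interactions[0]))          # 2
print(interactions[1][0]["actor"])   # customer
```

For a real file, open it with `open(path, newline="")`, as the `csv` module documentation recommends, and pass the file object instead of the `StringIO` sample (`path` is a placeholder here).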

Dataset file [51.9 MB]

License

The datasets are free to use for research purposes only.

If you use the datasets in your work, please cite the reference below.

Reference

- Doering, M., Kanda, T., & Ishiguro, H., Neural network based memory for a social robot: Learning a memory model of human behavior from data, ACM Transactions on Human-Robot Interaction (under review).

Related Papers

You might also like to check out our other work where we reproduce autonomous robot behaviors from human-human interaction:

- Doering, M., Glas, D. F., & Ishiguro, H., Modeling Interaction Structure for Robot Imitation Learning of Human Social Behavior, IEEE Transactions on Human-Machine Systems, February 2019. doi:10.1109/THMS.2019.2895753
- Doering, M., Liu, P., Glas, D. F., Kanda, T., Kulic, D., & Ishiguro, H., Curiosity did not kill the robot: A curiosity-based learning system for a shopkeeper robot, ACM Transactions on Human-Robot Interaction, in press.
- Liu, P., Glas, D. F., Kanda, T., & Ishiguro, H., Learning proactive behavior for interactive social robots, Autonomous Robots, November 2017. doi:10.1007/s10514-017-9671-8
- Liu, P., Glas, D. F., Kanda, T., & Ishiguro, H., Data-Driven HRI: Learning Social Behaviors by Example from Human-Human Interaction, IEEE Transactions on Robotics, Vol. 32, No. 4, pp. 988-1008, 2016. doi:10.1109/tro.2016.2588880

Inquiries and feedback

For any questions concerning the datasets please contact: malcolm.doering@atr.jp